2025

FUDOKI: Discrete Flow-based Unified Understanding and Generation via Kinetic-Optimal Velocities

Jin Wang*, Yao Lai*, Aoxue Li, Shifeng Zhang, Jiacheng Sun, Zhenguo Li, Ping Luo (* equal contribution)

Conference on Neural Information Processing Systems (NeurIPS) 2025 Spotlight

FUDOKI is a novel unified multimodal model that replaces traditional autoregressive architectures with discrete flow matching, enabling more flexible and effective visual understanding and image generation with performance comparable to state-of-the-art models.

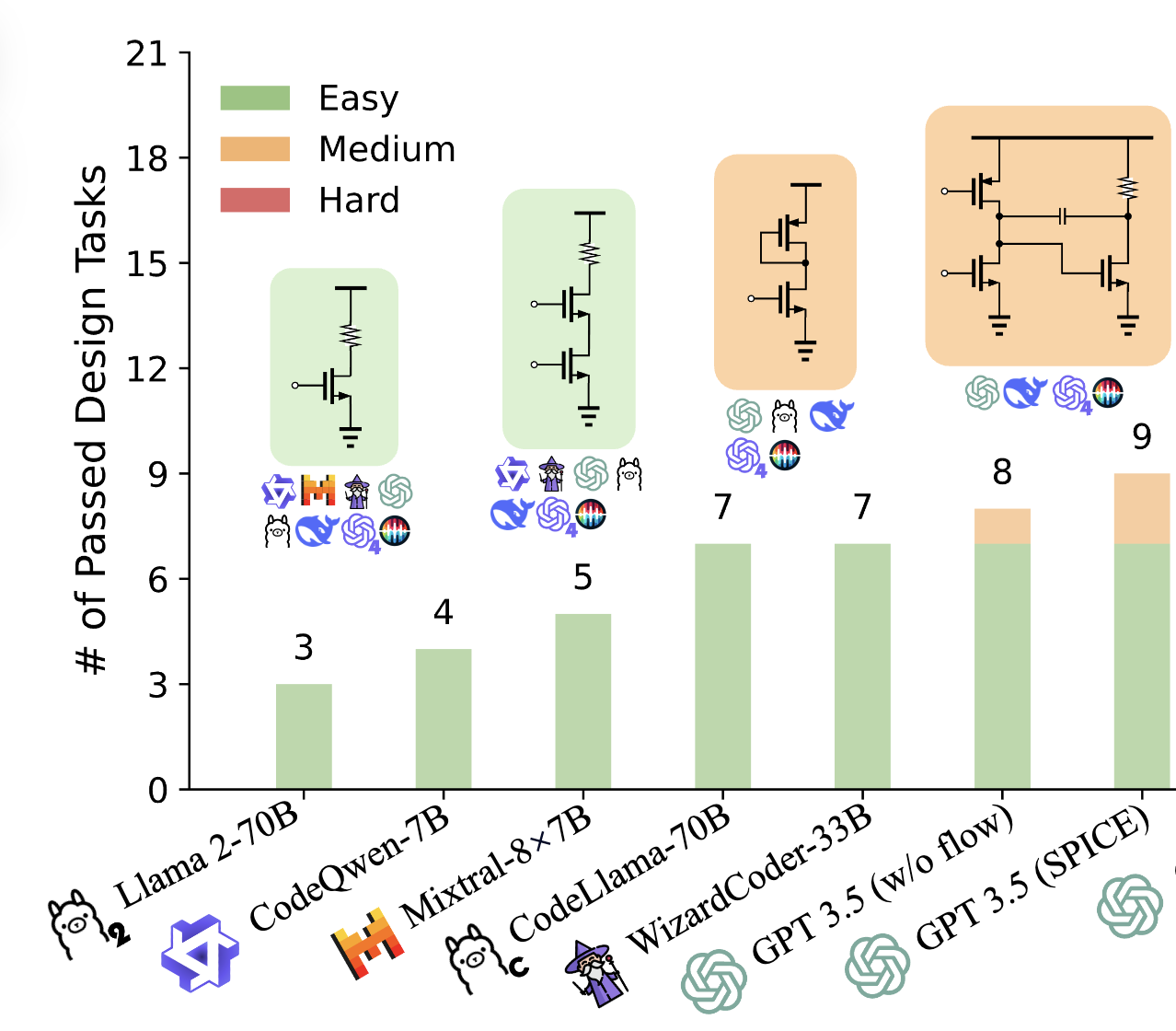

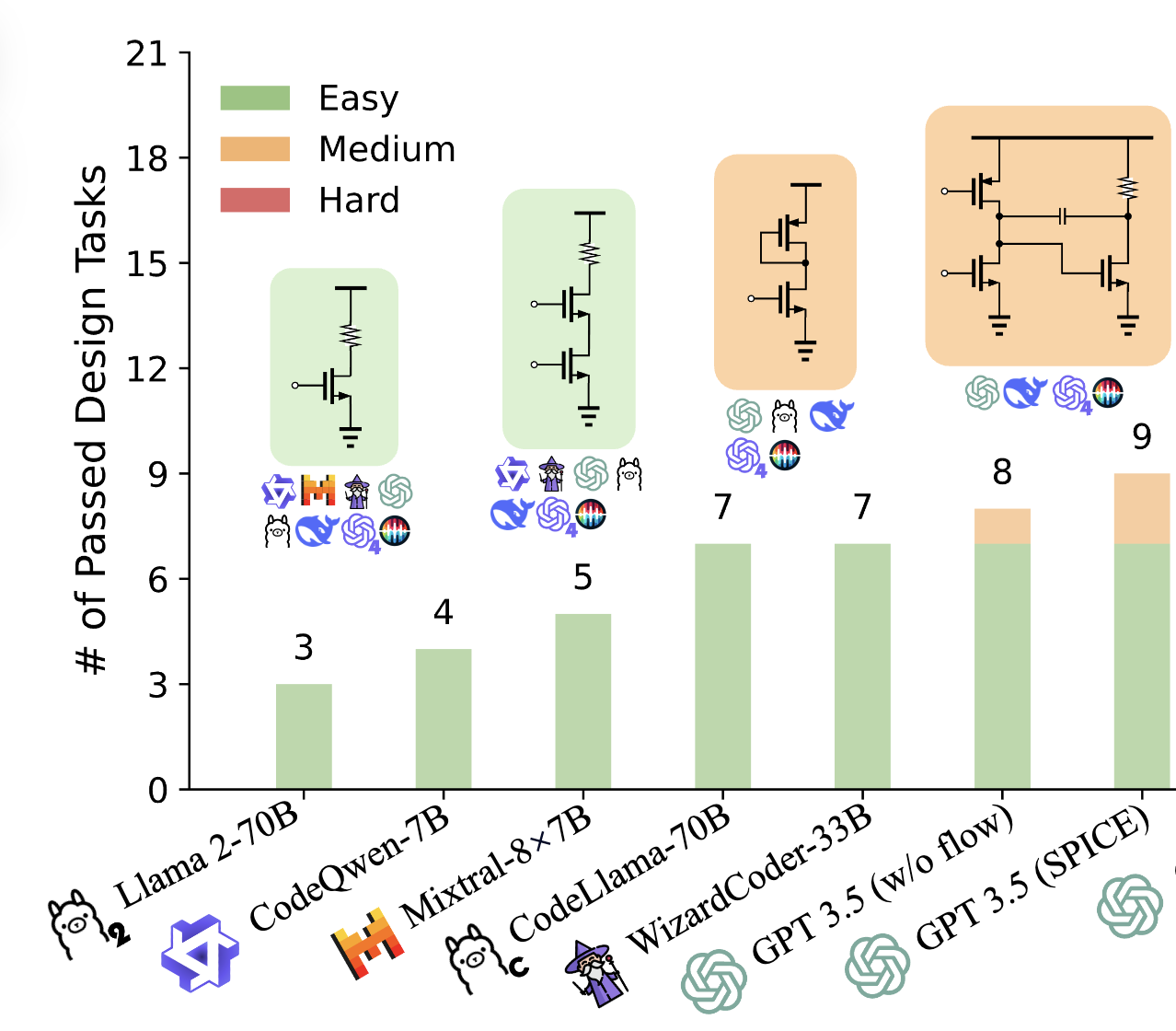

AnalogCoder: Analog Circuit Design via Training-Free Code Generation

Yao Lai, Sungyoung Lee, Guojin Chen, Souradip Poddar, Mengkang Hu, David Z. Pan, Ping Luo

AAAI Conference on Artificial Intelligence (AAAI) 2025 Oral; AAAI 2025 Top-15 Influential Papers (Rank 11)

AnalogCoder is a training-free LLM agent for analog circuit design. It uses feedback-driven prompts and a circuit library to achieve high success rates, outperforming GPT-4o on a variety of circuit designs.


2024

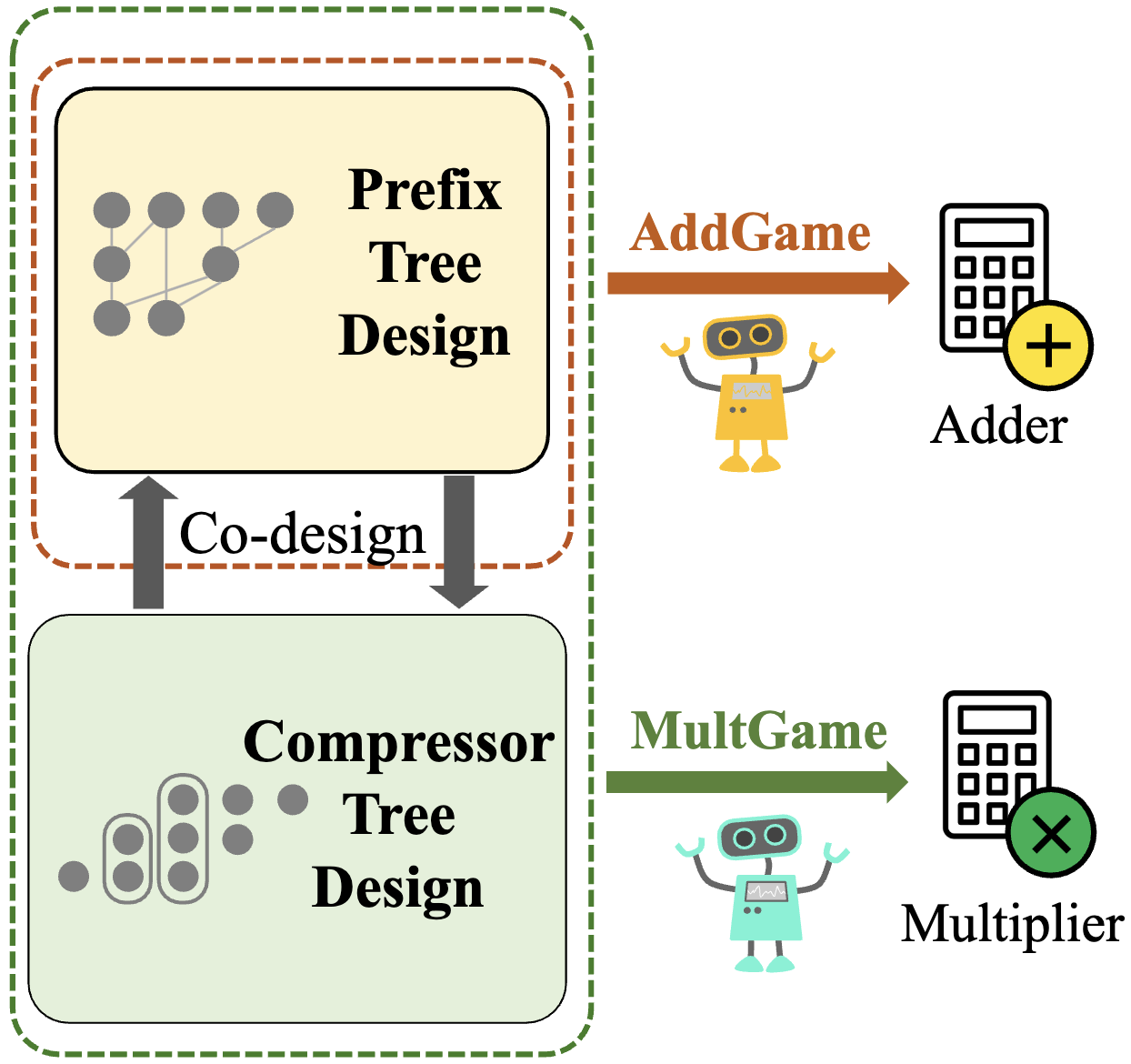

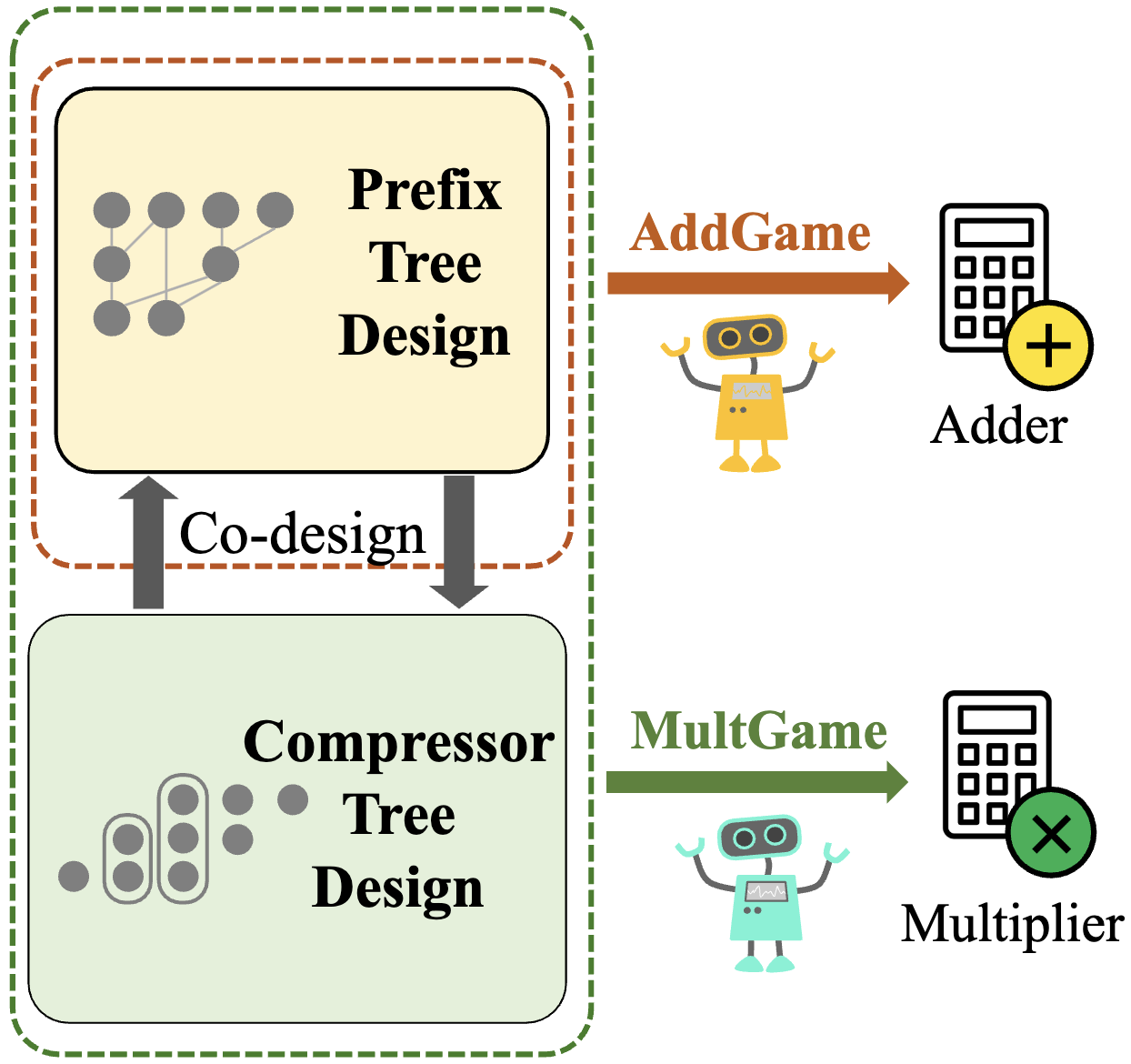

Scalable and Effective Arithmetic Tree Generation for Adder and Multiplier Designs

Yao Lai, Jinxin Liu, David Z. Pan, Ping Luo

Conference on Neural Information Processing Systems (NeurIPS) 2024 Spotlight

This work casts adder and multiplier design as tree generation tasks and optimizes them with reinforcement learning, achieving up to 49% higher speed and 45% smaller size, and scaling to 7nm technology.


2023

ChiPFormer: Transferable Chip Placement via Offline Decision Transformer

Yao Lai, Jinxin Liu, Zhentao Tang, Bin Wang, Jianye Hao, Ping Luo

International Conference on Machine Learning (ICML) 2023

ChiPFormer is an offline RL-based method that achieves 10x faster chip placement with superior quality and transferability to unseen circuits.


2022

MaskPlace: Fast Chip Placement via Reinforced Visual Representation Learning

Yao Lai, Yao Mu, Ping Luo

Conference on Neural Information Processing Systems (NeurIPS) 2022 Spotlight

MaskPlace leverages pixel-level visual representations for chip placement, achieving superior performance with simpler rewards, a 60%-90% wirelength reduction, and zero overlaps.
