University of Cambridge

I am a Postdoctoral Research Associate at the University of Cambridge, working with Prof. Robert Mullins. I received my Ph.D. in Computer Science from the University of Hong Kong (HKU), where I was affiliated with mmlab@HKU and advised by Prof. Ping Luo. During my Ph.D., I also collaborated with the UTDA Lab at The University of Texas at Austin, under the guidance of Prof. David Z. Pan (IEEE/ACM Fellow). Previously, I obtained my M.Eng. degree from the Software School at Tsinghua University, advised by Prof. Xiaojun Ye, and my B.Eng. degree from the Department of Microelectronics at Fudan University, advised by Prof. Xuan Zeng and Prof. Minge Jing. My research interests include AI for Electronic Design Automation (AI4EDA), AI for security, and related applications.

Education

- The University of Hong Kong, Ph.D. in Computer Science, Sep. 2021 - Nov. 2025
- Tsinghua University, M.Eng. in Software Engineering, Sep. 2017 - Jul. 2020
- Fudan University, B.Eng. in Electronics Engineering, Sep. 2013 - Jul. 2017

Experience

- University of Cambridge, Postdoctoral Research Associate, Dec. 2025 - Present
- The University of Texas at Austin, Visiting PhD Student, Feb. 2024 - Jul. 2024

Honors & Awards

- NeurIPS Scholar Award, 2024
- Hong Kong PhD Fellowship, 2021
- HKU Presidential PhD Scholar, 2021
- Outstanding Graduate of Software School, Tsinghua University, 2020
- Outstanding Bachelor Thesis Award, Fudan University, 2017
- Outstanding Graduate of Shanghai, China, 2017
- National Scholarship, China, 2015

Selected Publications

FUDOKI: Discrete Flow-based Unified Understanding and Generation via Kinetic-Optimal Velocities

Jin Wang*, Yao Lai*, Aoxue Li, Shifeng Zhang, Jiacheng Sun, Zhenguo Li, Ping Luo (* equal contribution)

Conference on Neural Information Processing Systems (NeurIPS) 2025 Spotlight

FUDOKI is a novel unified multimodal model that replaces traditional autoregressive architectures with discrete flow matching, enabling more flexible and effective visual understanding and image generation with performance comparable to state-of-the-art models.


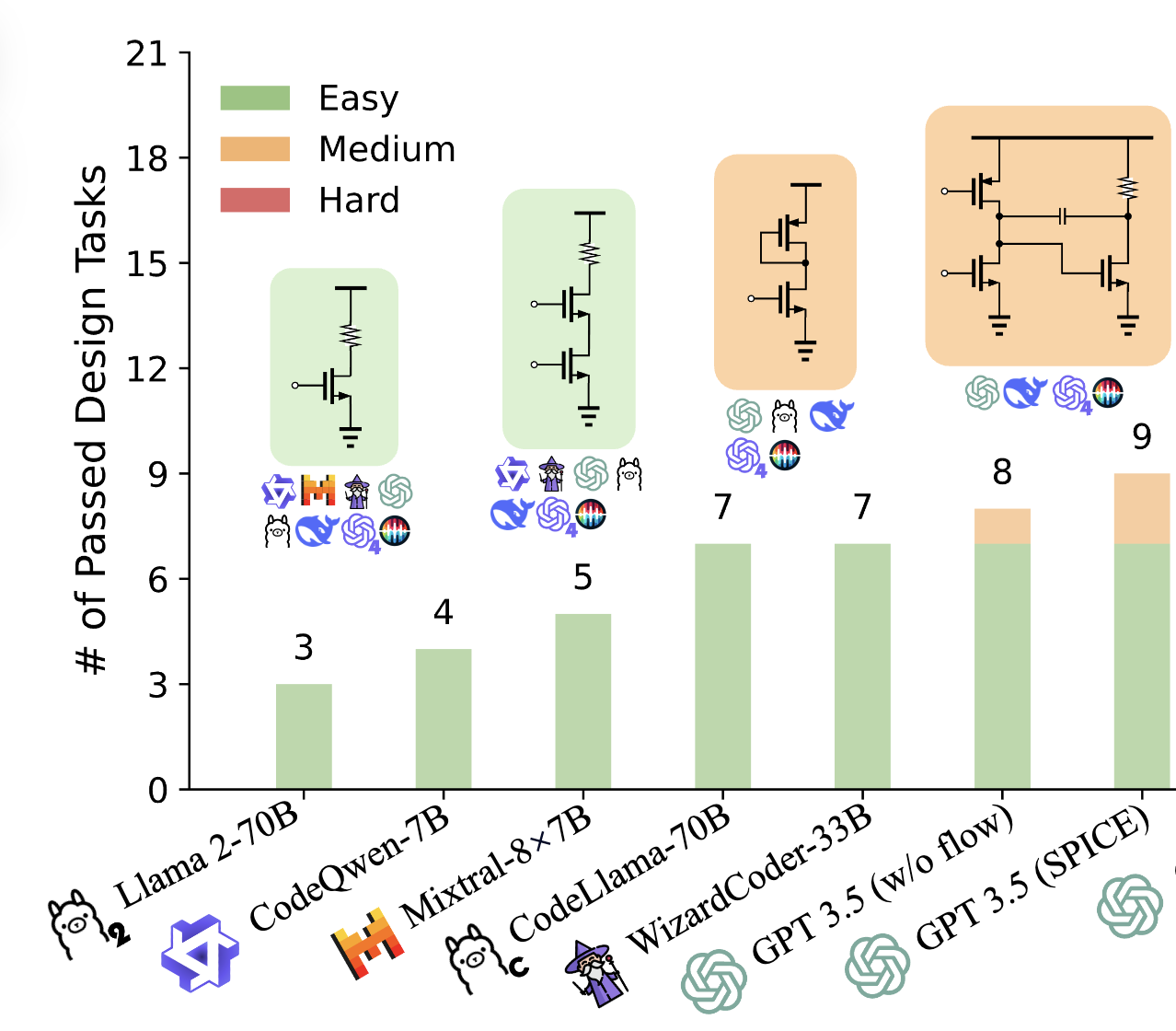

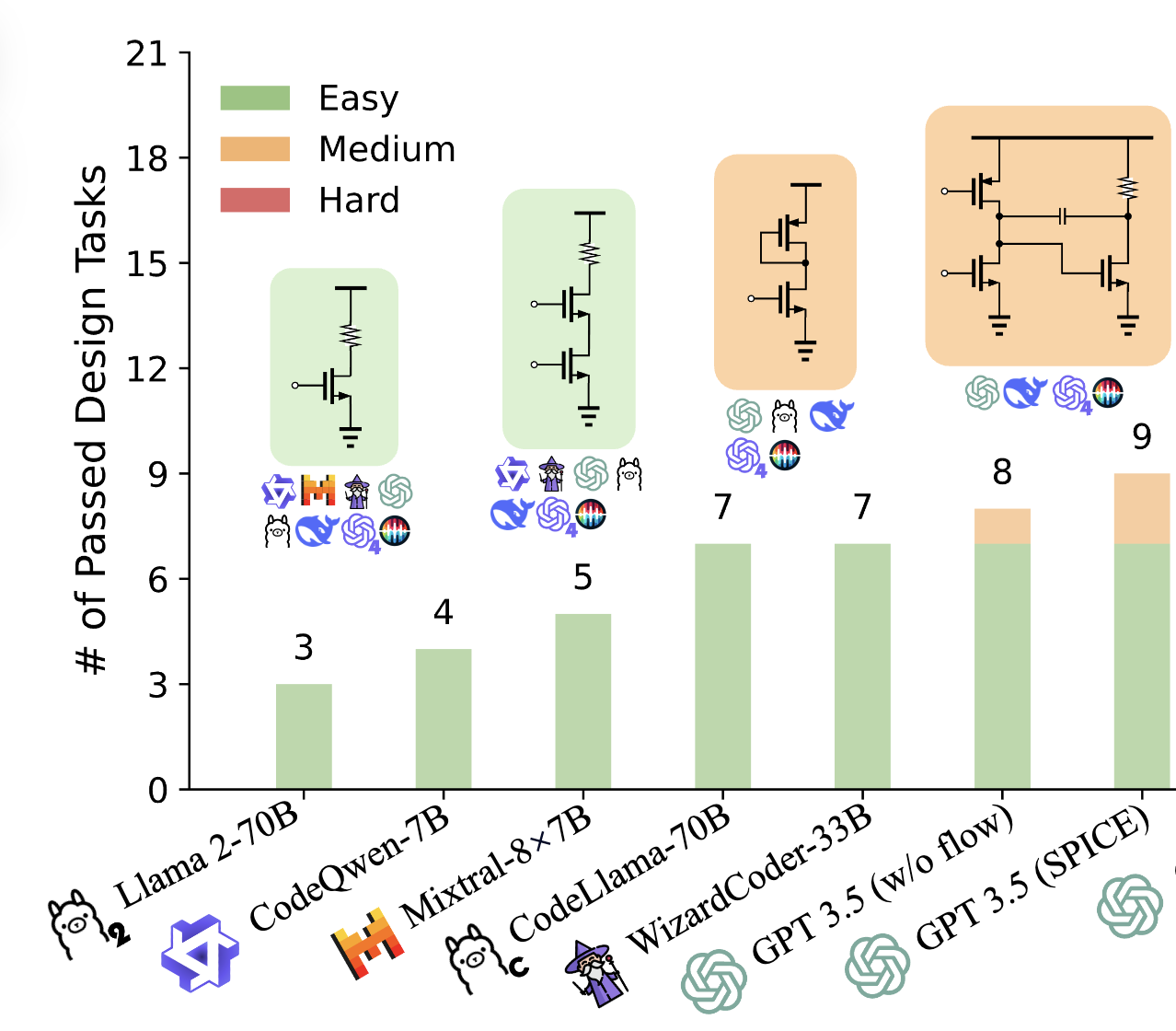

AnalogCoder: Analog Circuit Design via Training-Free Code Generation

Yao Lai, Sungyoung Lee, Guojin Chen, Souradip Poddar, Mengkang Hu, David Z. Pan, Ping Luo

AAAI Conference on Artificial Intelligence (AAAI) 2025 Oral; AAAI 2025 Top-15 Influential Papers (Rank 11)

AnalogCoder is a training-free LLM agent for analog circuit design. It uses feedback-driven prompts and a circuit library to achieve high success rates, and it outperforms GPT-4o across a variety of circuit design tasks.


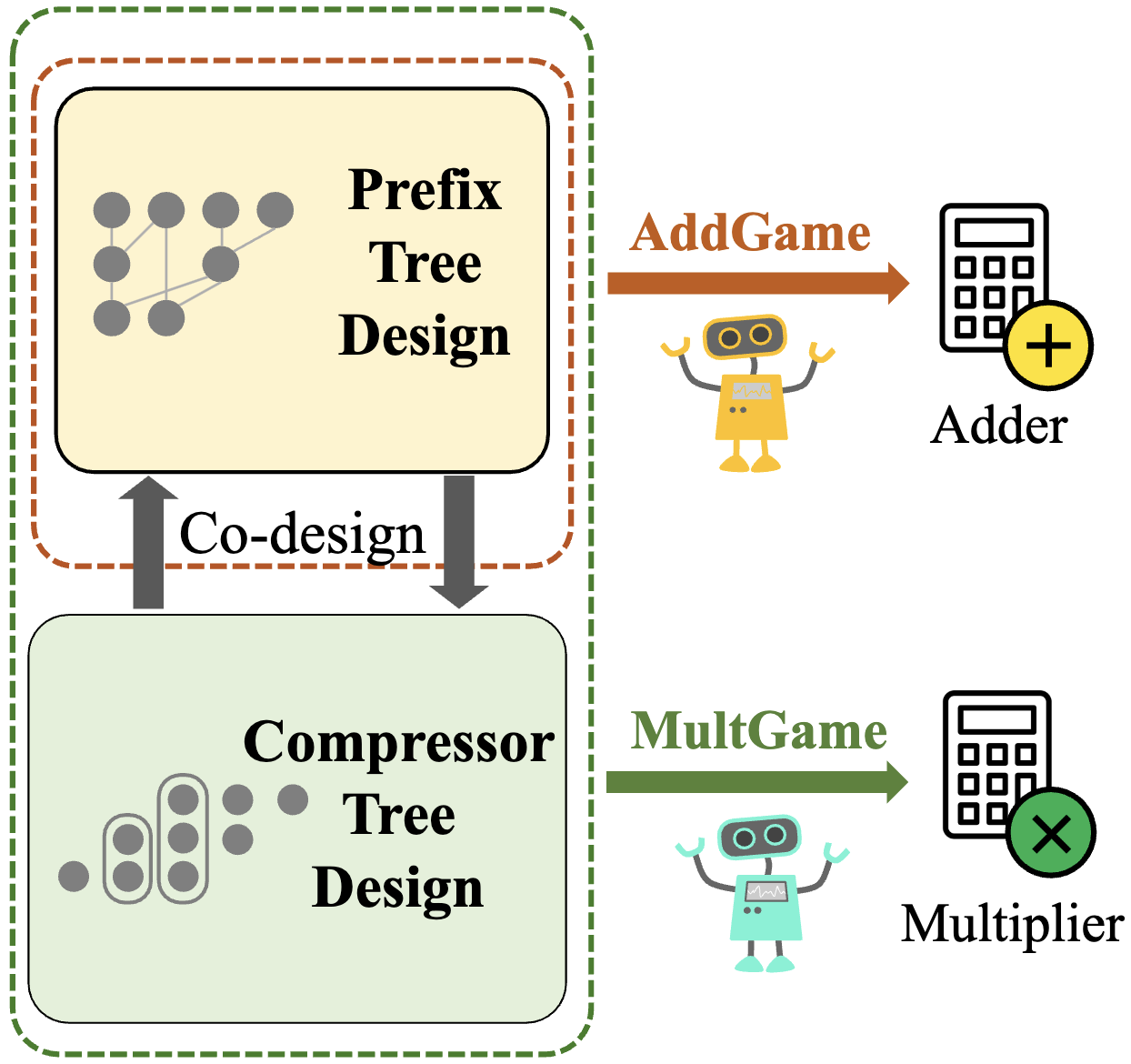

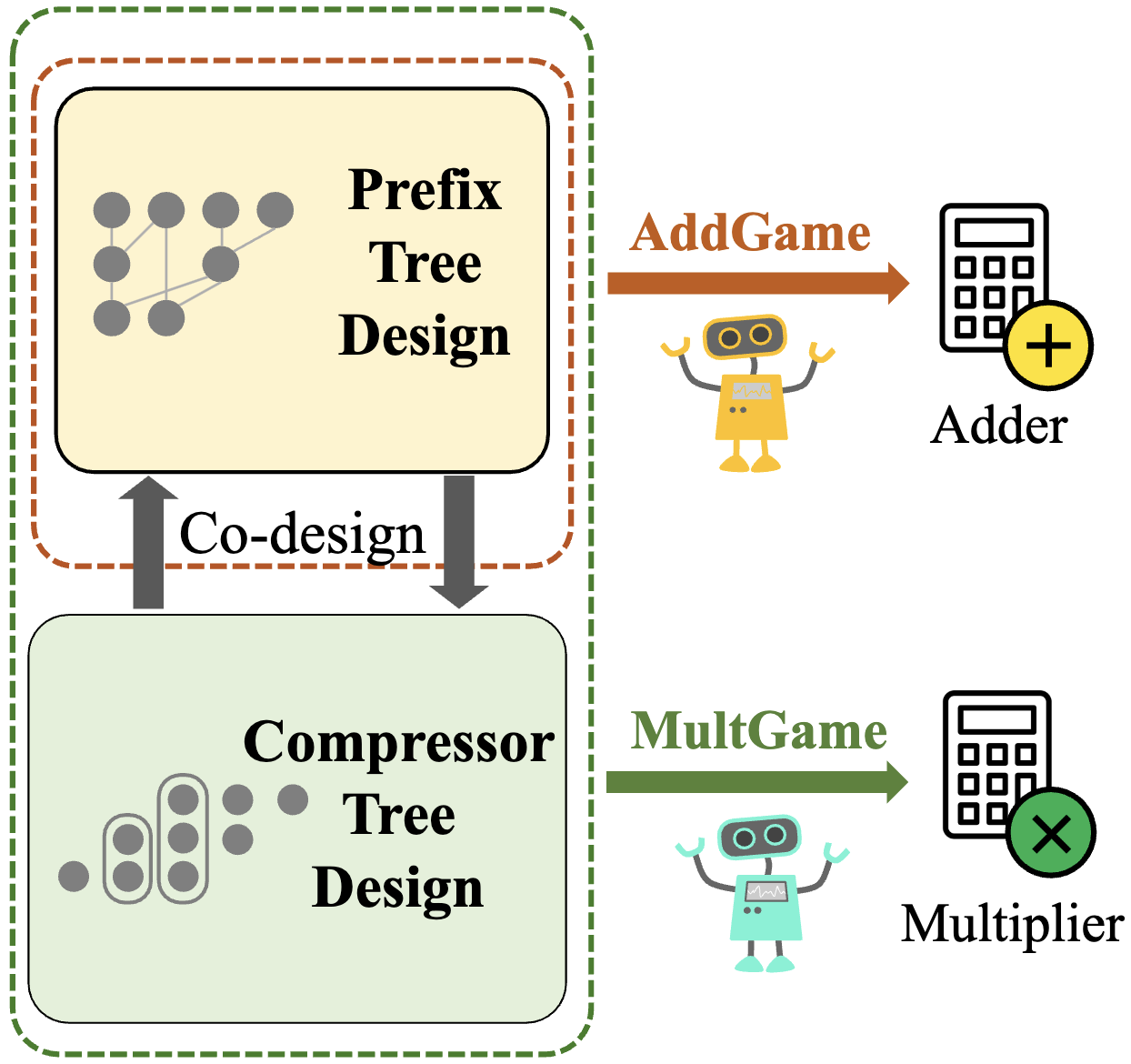

Scalable and Effective Arithmetic Tree Generation for Adder and Multiplier Designs

Yao Lai, Jinxin Liu, David Z. Pan, Ping Luo

Conference on Neural Information Processing Systems (NeurIPS) 2024 Spotlight

This work uses reinforcement learning to optimize adder and multiplier designs as tree generation tasks, achieving up to 49% faster speed and 45% smaller size, with scalability to 7nm technology.


ChiPFormer: Transferable Chip Placement via Offline Decision Transformer

Yao Lai, Jinxin Liu, Zhentao Tang, Bin Wang, Jianye Hao, Ping Luo

International Conference on Machine Learning (ICML) 2023

ChiPFormer is an offline RL-based method that achieves 10x faster chip placement with superior quality and transferability to unseen circuits.


MaskPlace: Fast Chip Placement via Reinforced Visual Representation Learning

Yao Lai, Yao Mu, Ping Luo

Conference on Neural Information Processing Systems (NeurIPS) 2022 Spotlight

MaskPlace is a method that leverages pixel-level visual representation for chip placement, achieving superior performance with simpler rewards, 60%-90% wirelength reduction, and zero overlaps.
